NAS

Neural Architecture Search (NAS) [49, 50, 32, 6, 42, 45] dominates efficient network design. From “MCUNet: Tiny Deep Learning on IoT Devices” by Lin, Ji, Wei-Ming Chen, Yujun Lin, John Cohn, Chuang Gan, and Song Han.

References:

Citations of NAS from “NeurIPS’20: MCUNet: Tiny Deep Learning on IoT Devices” by Lin, Ji, Wei-Ming Chen, Yujun Lin, John Cohn, Chuang Gan, and Song Han

- ICLR’17: Neural Architecture Search with Reinforcement Learning. Barret Zoph and Quoc V Le.

- CVPR’18: Learning Transferable Architectures for Scalable Image Recognition. Barret Zoph, Vijay Vasudevan, Jonathon Shlens, and Quoc V. Le.

- ICLR’19: DARTS: Differentiable Architecture Search. Hanxiao Liu, Karen Simonyan, and Yiming Yang.

- ICLR’19: ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware. Han Cai, Ligeng Zhu, and Song Han.

- CVPR’19: MnasNet: Platform-Aware Neural Architecture Search for Mobile. Mingxing Tan, Bo Chen, Ruoming Pang, Vijay Vasudevan, Mark Sandler, Andrew Howard, and Quoc V Le.

- CVPR’19: FBNet: Hardware-Aware Efficient ConvNet Design via Differentiable Neural Architecture Search. Bichen Wu, Xiaoliang Dai, Peizhao Zhang, Yanghan Wang, Fei Sun, Yiming Wu, Yuandong Tian, Peter Vajda, Yangqing Jia, and Kurt Keutzer.

- arXiv’20: Designing Network Design Spaces. Ilija Radosavovic, Raj Prateek Kosaraju, Ross Girshick, Kaiming He, and Piotr Dollár. arXiv preprint arXiv:2003.13678.

- Neural Arch Search – Lil’Log

- Neural Architecture Search: A Survey. Thomas Elsken, Jan Hendrik Metzen, Frank Hutter. Journal of Machine Learning Research, 2019.

- Blog: What’s the deal with Neural Architecture Search?

NAS overview

Reference:

- Neural Arch Search – Lil’Log

- Neural Architecture Search: A Survey. Thomas Elsken, Jan Hendrik Metzen, Frank Hutter. Journal of Machine Learning Research, 2019.

- Blog: What’s the deal with Neural Architecture Search?

Neural networks are hard to design.

Observation: The structure and connectivity of a neural network can typically be specified by a variable-length string. It is therefore possible to use a recurrent network (the controller) to generate such a string.

NAS: a gradient-based method for finding good architectures. Here "gradient-based" refers to the policy gradient used to train the controller.

NAS with reinforcement learning (ICLR’17): use a recurrent network to generate the model descriptions of neural networks and train this RNN with reinforcement learning to maximize the expected accuracy of the generated architectures on a validation set.

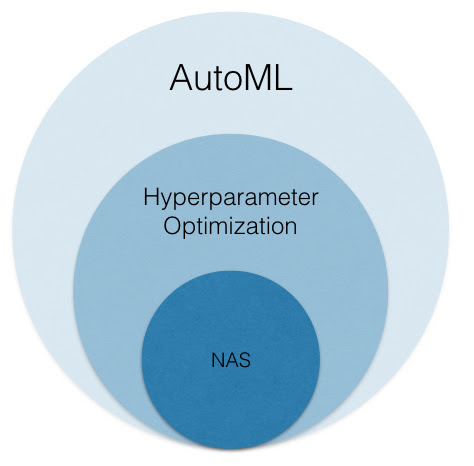
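The controller loop described above can be sketched in a few lines. This is a minimal toy, not the method from the ICLR’17 paper: the controller is reduced to one independent softmax per decision instead of an RNN, and `proxy_accuracy` is a synthetic stand-in for training a child network and measuring validation accuracy. `SEARCH_SPACE`, `proxy_accuracy`, and `train_controller` are hypothetical names introduced only for illustration.

```python
import math
import random

# Hypothetical search space: every decision picks one option from a list.
SEARCH_SPACE = {
    "num_layers": [2, 4, 6],
    "filter_size": [1, 3, 5],
    "num_filters": [16, 32, 64],
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def proxy_accuracy(arch):
    """Synthetic reward (assumption): peaks at 4 layers, 3x3 filters, 64 filters.

    A real NAS run would train the child network described by `arch`
    and return its accuracy on a validation set.
    """
    score = 0.4
    score += 0.2 if arch["num_layers"] == 4 else 0.0
    score += 0.2 if arch["filter_size"] == 3 else 0.0
    score += 0.2 if arch["num_filters"] == 64 else 0.0
    return score


def train_controller(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    # One logit vector per decision; the paper uses an RNN controller instead.
    logits = {name: [0.0] * len(opts) for name, opts in SEARCH_SPACE.items()}
    baseline = 0.0  # moving average of rewards, used to reduce variance

    for _ in range(steps):
        # 1. Sample an architecture from the current policy.
        choice = {}
        for name, opts in SEARCH_SPACE.items():
            probs = softmax(logits[name])
            choice[name] = rng.choices(range(len(opts)), weights=probs)[0]
        arch = {name: SEARCH_SPACE[name][i] for name, i in choice.items()}

        # 2. Evaluate it (here: the synthetic proxy).
        reward = proxy_accuracy(arch)
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward

        # 3. REINFORCE update: d log pi(a) / d logit_j = 1[j == a] - p_j.
        for name, idx in choice.items():
            probs = softmax(logits[name])
            for j, p in enumerate(probs):
                grad = (1.0 if j == idx else 0.0) - p
                logits[name][j] += lr * advantage * grad

    # Report the most likely architecture under the trained policy.
    return {
        name: SEARCH_SPACE[name][max(range(len(l)), key=l.__getitem__)]
        for name, l in logits.items()
    }


if __name__ == "__main__":
    print(train_controller())
```

The moving-average baseline plays the same role as the baseline in policy-gradient methods generally: it leaves the expected gradient unchanged while reducing its variance.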

NAS as a subproblem of hyperparameter optimization

Reference: Blog: What’s the deal with Neural Architecture Search?

AutoML focuses on automating every aspect of the machine learning workflow to increase efficiency and democratize machine learning so that non-experts can apply machine learning to their problems with ease. While AutoML encompasses the automation of a wide range of problems associated with ETL, model training, and model deployment, the problem of hyperparameter optimization is a core focus of AutoML. This problem involves configuring the internal settings that govern the behavior of an ML model/algorithm in order to return a high-quality predictive model.

For example, ridge regression models require setting the value of a regularization term; random forest models require the user to set the maximum tree depth and minimum number of samples per leaf; and training any model with stochastic gradient descent requires setting an appropriate step size. Neural networks also require setting a multitude of hyperparameters, including (1) selecting an optimization method along with its associated set of hyperparameters, (2) setting the dropout rate and other regularization hyperparameters, and, if desired, (3) tuning parameters that control the architecture of the network (e.g., number of hidden layers, number of convolutional filters).

Although the exposition on Neural Architecture Search (NAS) might suggest that it is a completely new problem, the final example above hints at a close relationship between hyperparameter optimization and NAS. While the search spaces used for NAS are generally larger and control different aspects of the neural network architecture, the underlying problem is the same as that addressed by hyperparameter optimization: find a configuration within the search space that performs well on the target task. Hence, we view NAS as a subproblem within hyperparameter optimization.
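One way to see the relationship is to put both kinds of settings into a single configuration space. The sketch below is an illustration under assumed names and values (`CONFIG_SPACE`, `sample_config`, and `space_size` are hypothetical): the architecture entries are sampled by exactly the same routine as the classic hyperparameters.

```python
import random

# Hypothetical configuration space mixing both kinds of settings.
CONFIG_SPACE = {
    # Classic hyperparameters.
    "optimizer": ["sgd", "adam"],
    "learning_rate": [1e-3, 1e-2, 1e-1],
    "dropout": [0.0, 0.25, 0.5],
    # Architecture hyperparameters: the part NAS is concerned with.
    "num_hidden_layers": [1, 2, 4, 8],
    "num_conv_filters": [16, 32, 64, 128],
}


def sample_config(space, rng):
    """Draw one configuration uniformly at random from the space."""
    return {name: rng.choice(values) for name, values in space.items()}


def space_size(space):
    """Number of distinct configurations in a discrete space."""
    size = 1
    for values in space.values():
        size *= len(values)
    return size


if __name__ == "__main__":
    rng = random.Random(0)
    print(sample_config(CONFIG_SPACE, rng))
    print(space_size(CONFIG_SPACE))  # 2 * 3 * 3 * 4 * 4 = 288
```

Nothing in `sample_config` distinguishes an architecture choice from a learning rate. The difference in practice is one of scale and structure, not of problem type.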

NAS components

Reference: Blog: What’s the deal with Neural Architecture Search?, December 18, 2018

- The first NAS method (Zoph et al., 2016) required a tremendous amount of computational power: hundreds of GPUs running for thousands of GPU-days in aggregate.

- Recent approaches exploit various methods of reuse to drastically reduce the computational cost.

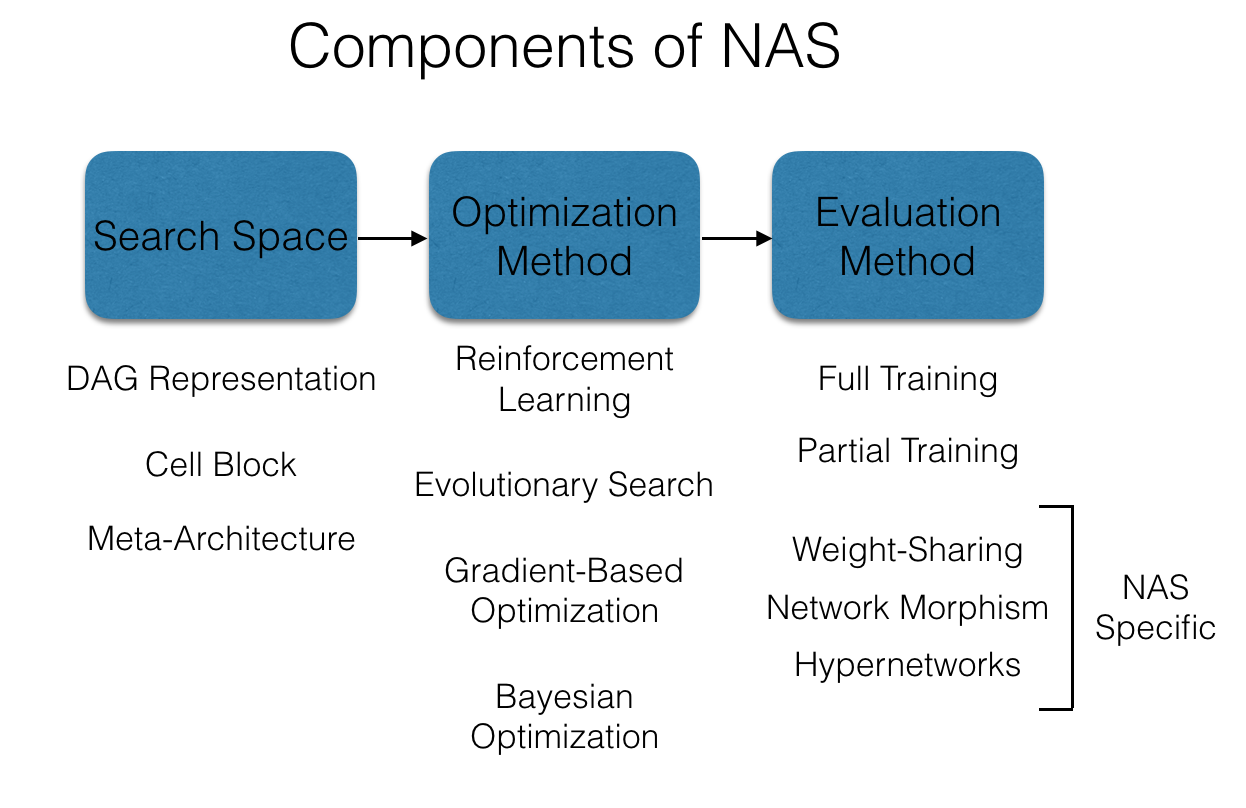

Three main components of most NAS methods (see the survey paper for more):

- Search space. This component describes the set of possible neural network architectures to consider. These search spaces are designed specifically for the application, e.g., a space of convolutional networks for computer vision tasks or a space of recurrent networks for language modeling tasks.

  - NAS methods are not fully automated, as the design of these search spaces fundamentally relies on human-designed architectures as starting points.

  - The number of possible architectures in these search spaces is often over 10^10.

- Optimization method. This component determines how to explore the search space in order to find a good architecture.

  - The most basic approach here is random search, while various adaptive methods have also been introduced, e.g., reinforcement learning, evolutionary search, gradient-based optimization, and Bayesian optimization.

  - While these adaptive approaches differ in how they determine which architectures to evaluate, they all attempt to bias the search towards architectures that are more likely to perform well.

  - Unsurprisingly, all of these methods have counterparts that have been introduced in the context of traditional hyperparameter optimization tasks.

- Evaluation method. This component measures the quality of each architecture considered by the optimization method.

  - The simplest, but most computationally expensive, choice is to fully train an architecture.

  - One can alternatively exploit partial training, similar in spirit to early-stopping methods commonly used in hyperparameter optimization, like ASHA.

    - This is an order of magnitude cheaper than full training.

  - NAS-specific evaluation methods have also been introduced to exploit the structure of neural networks to provide cheaper, heuristic estimates of quality.

    - network morphism

    - weight-sharing

    - hypernetworks

    - These are 2-3 orders of magnitude cheaper than full training.
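The three components above can be sketched as three small pieces of code. Everything here is an assumption made for illustration: the layer-wise space (`OPS`, `NUM_LAYERS`), the synthetic `evaluate` function that stands in for partial training, and the use of plain synchronous successive halving, which is the simpler scheme that ASHA makes asynchronous. Random sampling plays the role of the optimization method.

```python
import random

# --- Search space (hypothetical): one operation per layer. ---
OPS = ["conv3x3", "conv5x5", "maxpool", "skip"]
NUM_LAYERS = 20


def search_space_size():
    """4 choices over 20 layers gives 4**20 (about 1.1e12) architectures."""
    return len(OPS) ** NUM_LAYERS


def sample_architecture(rng):
    """Optimization method, simplest form: uniform random sampling."""
    return tuple(rng.choice(OPS) for _ in range(NUM_LAYERS))


# --- Evaluation method: synthetic stand-in for partial training. ---
def evaluate(arch, epochs, rng):
    """Return a noisy quality estimate; more epochs means less noise.

    Assumption: "quality" is just the fraction of convolutional layers.
    A real evaluator would train `arch` for `epochs` epochs and report
    validation accuracy.
    """
    quality = sum(op.startswith("conv") for op in arch) / len(arch)
    noise = rng.gauss(0.0, 0.2 / epochs)
    return quality + noise


def successive_halving(num_candidates=16, min_epochs=1, eta=2, seed=0):
    """Train many candidates briefly, keep the top 1/eta, train those longer."""
    rng = random.Random(seed)
    candidates = [sample_architecture(rng) for _ in range(num_candidates)]
    epochs = min_epochs
    while len(candidates) > 1:
        ranked = sorted(
            candidates,
            key=lambda arch: evaluate(arch, epochs, rng),
            reverse=True,
        )
        candidates = ranked[: max(1, len(ranked) // eta)]
        epochs *= eta  # survivors get a larger training budget
    return candidates[0]


if __name__ == "__main__":
    print(search_space_size())
    print(successive_halving())
```

Swapping `sample_architecture` for a reinforcement learning controller, an evolutionary step, or a Bayesian optimizer changes only the optimization method. Swapping `evaluate` for weight-sharing or a hypernetwork changes only the evaluation method.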

NAS models vs. human-designed models

Public NAS Implementations

A collection of NAS papers and code:

ENAS: Efficient Neural Architecture Search via Parameter Sharing and Language Model Implementation of ENAS (google)

Learning Search Space Partition for Black-box Optimization using Monte Carlo Tree Search.

- LA-MCTS code (Facebook)

- From one-shot NAS to few-shot NAS

- From LaNAS to a generic solver LA-MCTS

- From AlphaX to LaNAS

- MCTS based NAS agent

- old AlphaX: AlphaX-NASBench101

TF-NAS: Rethinking Three Search Freedoms of Latency-Constrained Differentiable Neural Architecture Search

Fair DARTS: Eliminating Unfair Advantages in Differentiable Architecture Search

DARTS: Differentiable Architecture Search

UNAS: Differentiable Architecture Search Meets Reinforcement Learning, CVPR 2020 Oral

Rethinking Performance Estimation in Neural Architecture Search

More

- 2017 ICLR Neural Architecture Search with Reinforcement Learning

- 2019 Survey

References: Neural Architecture Search with Reinforcement Learning. Barret Zoph and Quoc V. Le. ICLR 2017. Overview: Neural networks are hard to design. The paper uses a recurrent network to generate the model descriptions of neural networks and trains this RNN with reinforcement learning to maximize the expected accuracy of the generated architectures on a validation set.

References: Neural Architecture Search: A Survey. Thomas Elsken, Jan Hendrik Metzen, Frank Hutter. Journal of Machine Learning Research, 2019. Deep Learning has enabled remarkable progress over the last years on a variety of tasks, such as image recognition, speech recognition, and machine translation. One crucial aspect for this progress are novel neural architectures. Currently employed architectures have mostly been developed manually by human experts, which is a time-consuming and error-prone process.
