2019 Rigorous

Q&A

Is there any bug that cannot (or hard to) be found without the formal model in the paper?

- In section VI, subtle bugs are found in CHERI ISA:

- CLC instruction: load data without permission check;

- bug in arch document and L3 model.

- legacy MIPS store allowed writing one byte past the region of the memory the code had permission to, and, if the code had access to the end of the address space, stores could write to the beginning of the address space. (In L3)

- unaligned MIPS loads allowed loading from a region of memory without permission. (In the L3 model and the Bluespec hardware impl.)

CBuildCapinstruction created a capability with the wrong base. (In L3)- Exception return (ERET) could access a system register (the EPCC) without permission. (In L3)

CCallFastinstruction, which invokes capabilities, exposed the unsealed code capability, breaking isolation between compartments that can invoke each other’s capabilities. (In L3)

What is the benefits of using fm?

- automate generated hardware validation tests, run before hw is implemented;

- clear to explain complex designs;

- assess whether the security properties are hold;

- over all executions of arbitrary code.

Is there any vendor in industry using similar tech?

What is the relationship between the verification here and the verification in the EDA (Electronic Design Automation)?

References:

- Rigorous enginieering for hardware security: formal modelling and proof in the CHERI design and implementation process, by Kyndylan Nienhuis, Alexandre Joannou, Anthony Fox, Micheal Roe, Thomas Bauereiss, Brian Campbell, Matthew Naylor, Robert M. Norton, Simon W. Moore, Peter G. Newmann, Ian Stark, Robert N. M. Watson, Peter Sewell. UCAM-CL-TR-940.

Overview

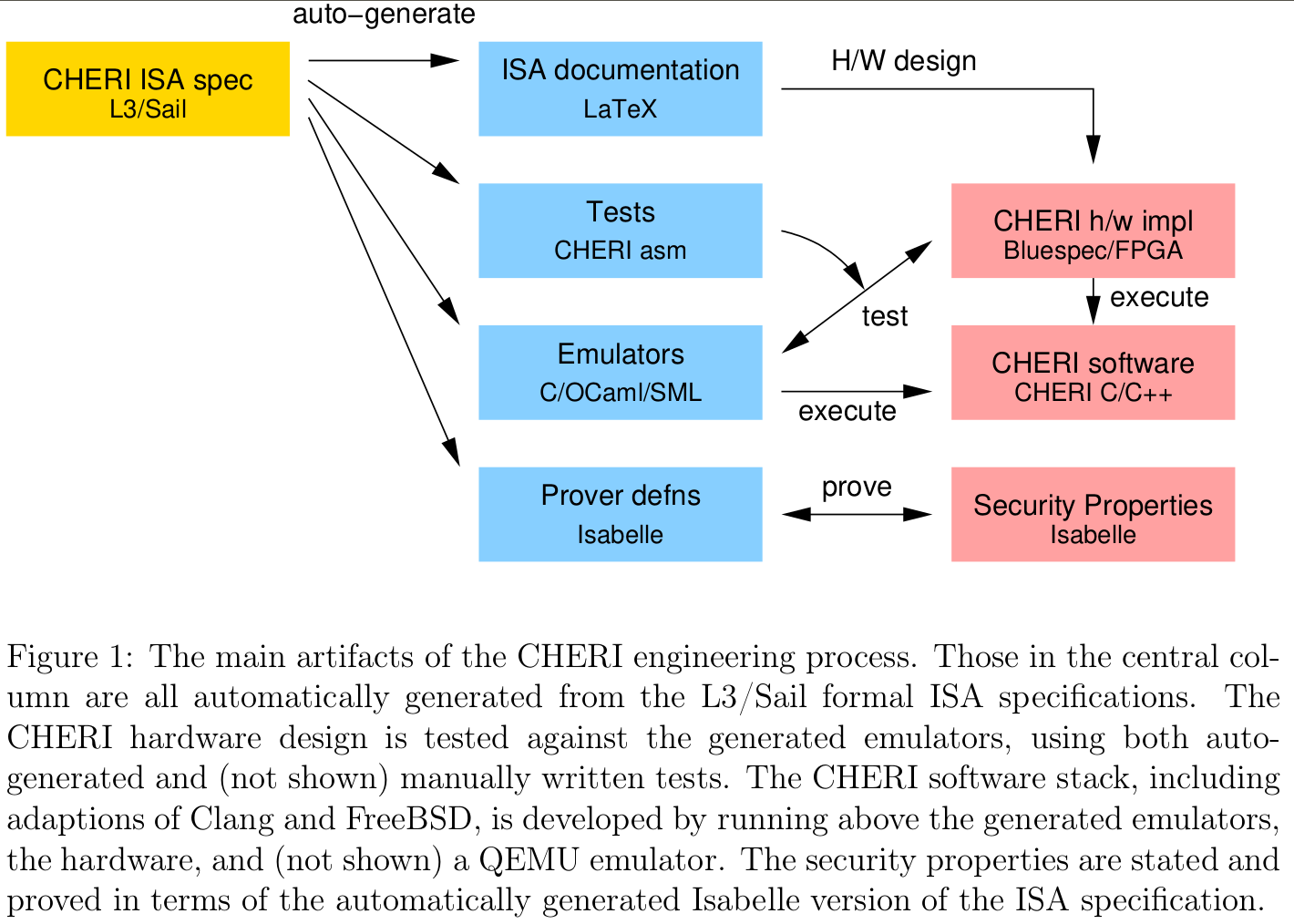

We use formal models of the complete instruction-set architecture (ISA) at the heart of the design and engineering process, both in lightweight ways that support and improve normal engineering practice – as documentaion, in emulators used as a test oracle for hardware and for running software, and for test generation – and for formal verification.

We formalise key intended security properties of the design, and establish that these hold with mechanised proof. This is for the same complete ISA models (complete enough to boot operating systems), without idealisation.

We do this for CHERI, an architecture with hardware capabilities that supports fine-grained memory protection and scalable secure compartmentalization, while offering a smooth adoption path for existing software.

prose/pseudocode ISA descriptions ==> rigorous definitions in (L3/Sail), on software/hardware interface.

security properties are stated precisely and formally in relatively simple terms, using basic operations over sets and quantified formulas. (Isabelle)

security properties are mathematically proved. (Isabelle)

Backgroud

Two fundamental problems for memory safety bugs:

- mainstream hardware architectures and languages (dating back to 1970s, C/C++) only support coarse-grained memory protection, via MMU.

- mainstream systems are typically developed using test-and-debug engineering methods.

- good to build systems that are sufficiantly functionally correct under normal use;

- but fail to build secure systems, where an easily missed corner case could be actively found by attackers, and could be used to compromise the entire system.

CHERI’s two foundamental principles:

- Principle of least privilege.

- C/C++ objects;

- Javascript engine vs. SSL connection keys.s

- Principle of intentional use. A program clearly states which permission it uses to authorise its action.

- avoid the confused deputy problem5.

- e.g. OS vs user processes. More restricted permissions on the user space buffer for OS code.

- “For example, an operating system may need to hold permission to access the entirety of a user process(e.g. for paging), but when that process makes a read() system call, passing a pointer to a user-space buffer, CHERI lets the relavant OS code to use the more restricted permission to just that buffer.”

CHERI’s work

- a bluespec fpga hardware implementation, and

- a software stack above it, adapting LLVM and FreeBSD to CHERI-MIPS/RISC-V/ARM.

- extensive work on:

- the interaction between the capability system and systems aspects of memory management(static and dynamic linking, process creation, context switching, page swapping and signal delivery) 6;

- the overhead of compiling pointers to capabilities 7 8;

- the compartmentalization of legacy software 9;

- the performance overhead of the tagged memory 10;

- the performance overhead of the protection-domain switches 11;

Lightweight rigorous engineering

UCAM-CL-TR-940, chapter 3.

Goal: simultaneously enabling formal statement and proof of security properties of their [security, architecture, and operating systems researchers and developers] actual design, not just some idealised model thereof. To improve their engineering practice in multiple lightweight ways, with immediate benefits long before the formal proof was complete:

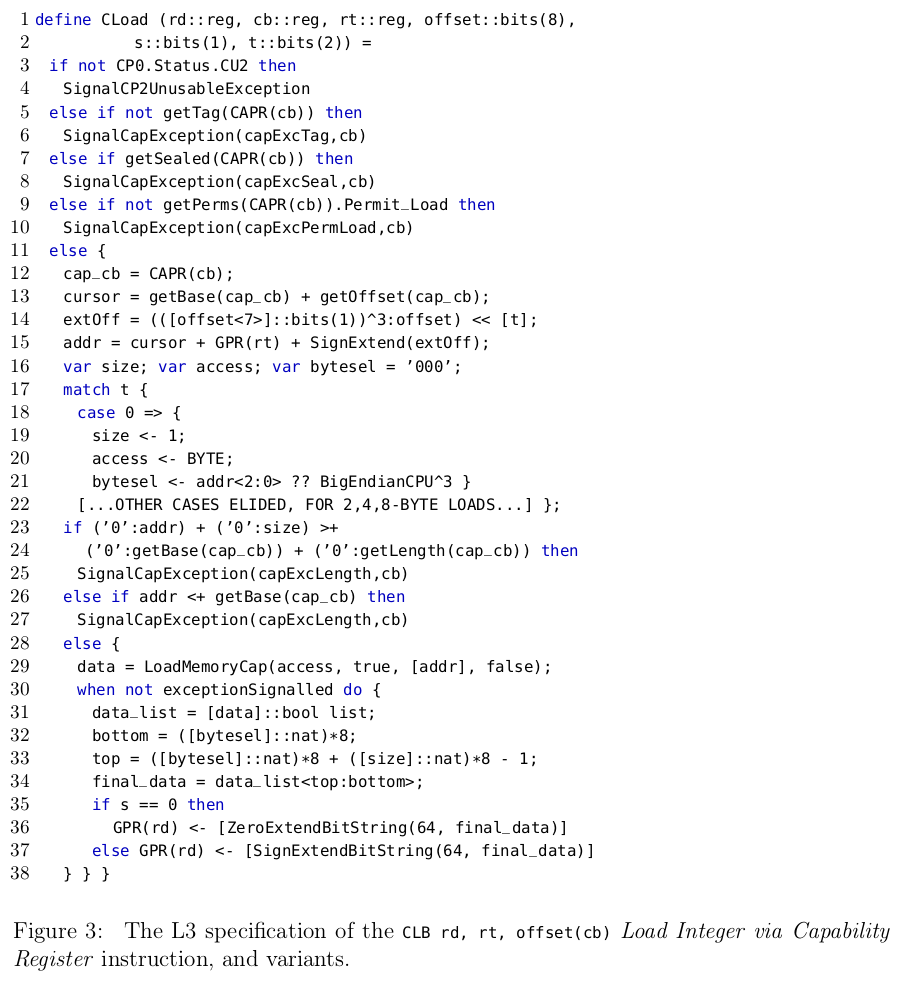

Use L3 and Sail ISA Models:

- to replace informal pseudocode for ISA design specifications.

- to automatically generate emulators as oracles for testing the Bluespec FPGA hardware implementation;

- to routinely bring up new software above the executable formal models while helping to narrow down the source of any bugs with ease.

- to automatic generate tests with authoritative formal models.

- One can simply compare hardware vs model running arbitrary code, so can be automated.

- To generate random sequences of instructions that achieve good coverage in the presence of a large number of security tests in the capability instructions, it was important to control which instructions could generate a processor exception and why. We used a combination of symbolic execution of the L3 specification and automatic constraint solving[^c35] to find an initial processor state where only the chosen instruction would fault.

Industrial processor architecture specifications:

- AMD64, IBM POWER, Intel 64, MIPS, RISC-V, and SPARC;

- define their envelops of programmer-visible allowed behaviour with documents containing a mix of prose and pseudocode.

- typically multi-thousand-page books containing masses of detail, about instruction behaviour, encodings, address translation, interrupts, etc.

- NOT computational artefacts, so vendors also develop internal “golden” reference models to use as oracles for hardware testing, often in conventional programming languages such as C++.

- ARM, exceptionally, have recently transitioned to a machine-processed pseudocode, so what is in their manual can actually be tested against 12.

CHERI’s architecture specifications:

- started off in 2011 with traditional pseudocode descriptions, together with experimental formal modeling (unpublished) of key instructions in PVS13.

- In 2014, starting the work on complete formal models in the L3 [^c32] Instruction Description Language (IDL).

- More recently in Sail 14.

L3 and Sail:

- both strongly typed, first-order imperative language.

- aim to be accessible to engineers without a formal background.

- build a complete model includes everything that is necessary to boot an operating system, including exceptions, the translation lookaside buffer (TLB), and the programmable interrupt controller(PIC).

- parse and type-check their input, immediately catching errors that are easy to make in non-mechanised pseudocode specifications.

- allow no ambiguity.

Sail differs from L3:

- mainly in providing a rich but decidable type system;

- with lightweight dependent types to let computed bitvector lengths be statically checked.

both around 7k lines of specification.

Stating architectural security properties

UCAM-CL-TR-940, chapter 4.

Formal vs Prose security properties:

- Prose properties are prone to ambiguities; Formal ones can help to identify and resolve these ambiguities.

- Prose properties are hard to prove; Formal ones stated in L3 and Sail can be automatically exported to theorem prover (Isabelle/HOL, HOL4, Coq).

Example of ambiguity: the capability monotonicity

- Prose documentation: “new capabilities must be derived from existing capabilities only via valid manipulations that may narrow (but never broaden) rights ascribed to the original capability.”

- ambiguity: What is “broadening the rights of a capability”? Broadening bounds? Unsealing a capability?

- Prose documentation: “controlled violation of monotonicity can be achieved via the exception delivery mechanism […] and also by the CCall instruction”.

- ambiguity: What is the definition of “controlled violation”?

- Prose documentation: “monotonicity allows reasoning about the set of reachable rights for executing code, as they are limited to the rights in any capability registers, and inductively, the set of any rights reachable from those capabilities”.

- ambiguity: It does not define when a right is reachable from a capability, so one cannot know exactly what the upper bound of the rights is.

Security property definitions for CHERI:

- Define an order over capabilities to clarify what “broadening the rights of a capability” should mean;

- Define an abstraction of CHERI-MIPS with abstract actions for each type of memory access and capability manipulation, capturing the intentions of CHERI-MIPS instructions by mapping them onto these actions.

- for each action we state under what conditions it can be performed, and what effects it has.

- Amongst other things, this precisely states the effects of instructions that can broaden the rights of a capability, clarifying what “controlled violation of capability monotonicity” should mean.

- It also states properties that have no prose counterparts in the CHERI documentation, but that are nonetheless crucial to the capability system.

Characterize which capabilities a (potentially compromised) compartment could access or construct if it is allowed to execute arbitrary code.

- which part of memory and which registers the compartment can overwrite, capturing the “reachable rights for executing code”.

For application cases, we state what assumptions need to be satisfied in order to isolate a compartment from the rest of the program, and which guarantees CHERI-MIPS then offers.

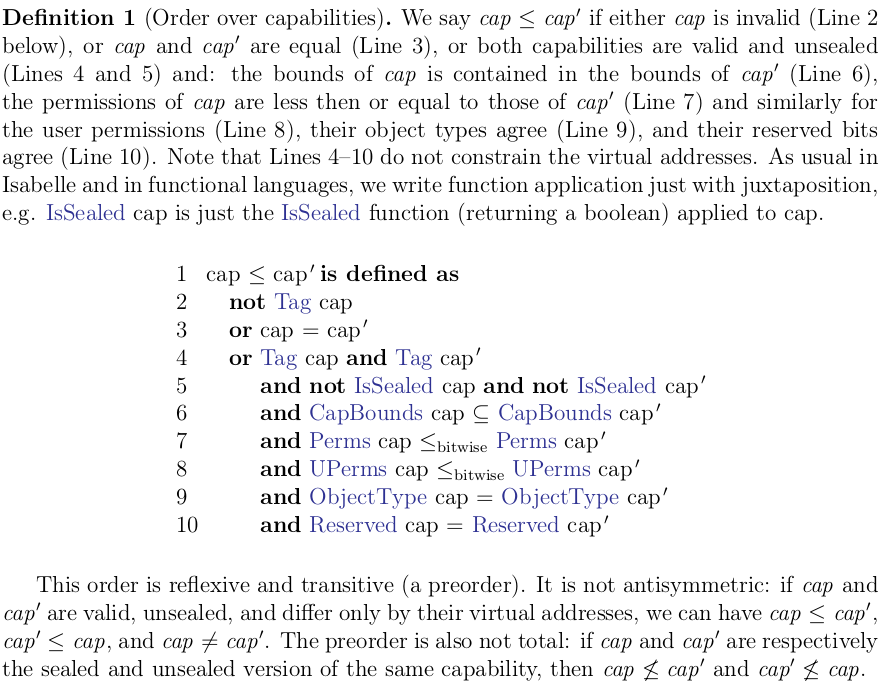

Capability order

$\le$:

- The authorities of sealed and unsealed capabilities are incomparable even if they have the same bounds and permissions.

- unsealed capability can authorize memory accesses but the sealed capability cannot, while the sealed capability can be invoked (with the right permissions) but the unsealed one cannot.

- Invalid capabilities have no authority.

- Sealed capabilities are immutable while they stay sealed.

- Unsealed capabilities can be restricted by shrinking their bounds or removing their permissions.

- the virtual address of unsealed capabilities can be changed to any value without affecting the authority.

This leads to the following definition.

Abstract Actions

Define properties about the effects of a single execution step, abstracting from the detailed behaviour of CHERI-MIPS instructions defined by L3 (or Sail) model.

Goals:

- Capture the principle of intentional use by mapping instructions onto abstract actions;

- Define an abstract action for

- each kind of memory accesses: loading data, storing data, loading cap, storing cap.

- each kind of cap manipulation: restricting, sealing, unsealing, invoking.

- hardware exceptions.

- Abstract actions contain some extra information, for example:

- the register index of the capability that is used as authority (if applicable)

- 180 instructions -> 9 abstract actions.

- abstract away from many details but

- retain the ability to define different security properties for different intentions.

- Define security properties that are strong enough to imply more complex security properties (reachable capabilities, compartment isolation)

- define invariants about

- address translation,

- kernel mode, and

- the exception-control ‘CP0’ registers

- define properties that describe what happens when a certain abstract action is not intended.

- e.g. if the instruction does not intend to store anything to an address

a, then the memory atashould remain unchanged. (???assuming no concurrent; no physical misfunction)

Action examples, non-domain-crossing:

LoadDataAction, parameters:reg auth, the register of the capability that is used as authority;reg a, the physical address of the data;l, the length of the data that is loaded.

StoreDataAction, parameters:LoadCapActionreg authreg a, physical address of the capability;reg r, the destination register.

StoreCapAction, parameters:reg authreg r, the source registerreg a, physical address of the destination

RestrictCapActionreg r, the source registerreg r', the destination register where a restricted version of the source is copied to.

SealCapActionreg authreg r, the source registerreg r', the destination register where a sealed version of the source is copied to

UnsealCapAction.reg authreg rreg r'

Domain crossing actions:

RaiseException, no parameter.InvokeCapability, parameters:reg r, code capabilityreg r', data capability

SwitchDomain, parameter:Action a, an action that yields the execution to another domain.

KeepDomain, parameter:actions, a set of actions that continue the execution in same domain.

Mapping instructions to actions:

- The

CSeal cd, cs, ctinstruction maps toKeepDomain {SealCapAction ct cs rd}

Actions refers to physical memory, but the instructions refer to virtual memory. When mapping these instructions, we therefore translate the addresses. (??? Correctness Verifiable??? Does the translation has the equal effects with the real translation in the full system deployment???)

Instructions that load capabilities map to both a LoadCapAction and a LoadDataAction, because they can indirectly be used to load data (for example, by loading data into a capability register and inspecting the fields of the capability).

Define security properties for the abstraction

For each action, define a property that states the prerequisites and effects of that action.

These are properties of an arbitrary CHERI-MIPS ISA semantics sem, which

we later prove hold of the actual semantics.

Property 2: Restricting capabilities. The simplest, requiring only the the resulting capability is less than or equal to the original.

RestrictCapProp sem is defined as

for all s s' actions r r'.

if StateIsValid s

and (KeepDomain actions, s') $\in$ sem s

and RestrictCapAction r r' $\in$ actions

then CapReg s' r' $\le$ CapReg s r An ISA semantics sem satisfies the RestrictCapProp property only when, for all states s s', actions, registers r, r':

- state

sis valid; - execution step from

stos'with the intention to keep the domain, while perform a number of actions; - one of the actions is

RestrictCapAction r r'; CapReg s' r'$\le$CapReg s r: the less than or equal cap order.

Property 3: Storing data. To store data, one needs a valid, unsealed capability with the PermitStore permission, and the physical addresses stored to should correspond to virtual addresses that lie within the bounds of the capability.

StoreDataProp sem is defined as

for all s s' actions auth a ln.

if StateIsValid s

and (KeepDomain actions, s') \in sem s

and StoreDataAction auth a ln \in actions

then Tag (CapReg s auth)The semantics sem satisfies the StoreDataProp property if the following holds:

StateIsValid s:sis a valid state;(KeepDomain actions, s')$\in$sem s: consider an execution stepstos'with the intention to keep the domain and perform a num of actions.StoreDataAction auth a ln$\in$actions: assumeStoreDataAction auth a lnis one of the actions.Tag(CapReg s auth): the capabilityauthhas a tagnot IsSealed(CapReg s auth):authis not sealedPermitStore(CapReg s auth):authhasPermitStorepermissionln != 0: the lenghlnof the stored segment is not zero- `

Reachable Capabilities

Build a Compartment (User Space)

Proving the architectural security properties

Mechanised all our proofs in Isabelle15.

Automated proof methods, tailored to L3 specifications. Use Eisbach [^c41], an extension of Isabelle’s proof language.

- Our custom tactics can automatically prove the security properties for most CHERI-MIPS instructions that do not directly interact with the capability mechanisms.

Use python scripts to generate the statements and proofs of many lemmas.

Evaluation - Bugs found

with CHERI-MIPS.

Six Bugs found by proof work:

CLCinstruction: load cap withPERMIT_LOAD_CAPABILITY; could load even if no perm, but loaded without cap tag. In arch document and L3 model.- Legacy MIPS store: write pass one byte; could pass after the end of address space and write to the beginning. In L3.

- unaligned MIPS loads allowed loading from a region of memory without permission. In L3 model and the Bluespec impl.

CBuildCapinstruction: create cap with wrong base. In L3.ERET: access a system register (EPCC) without permission. In L3.CCallFast: invokes capabilities; exposed unsealed code capability, breaking isolation between compartments that can invoke each other’s capabilities. In L3.

From L3 to Sail

Sail generates emulators in OCaml and C, as well as theorem prover definitions for Isabelle/HOL, HOL4, and Coq.

Sail definitions can be integrated with multicore relaxed memory models.

Sail models: CHERI-MIPS, ARMv8-A, RISC-V, CHERI-RISC-V.

Cons

Cannot yet verify CHERI as a whole “is secure”:

- Does not prove the hardware implementation correct with respect to the architecture;

- Does not prove correctness or security properties of system software above the architecture;

- Cannot prove about side-channel information flow via timing behaviour or power consumption;

- A loose defined architecture to admit variations: Cannot prove about architecturally visible information flow: a (compromised or adversarial) hardware implementation could leak information while conforming to this loose architecture specification.

- do exclude architecturally visible capability flow.

- Only covers the uniprocessor case.

of processors with ISA-Formal,” in International Conference on Computer Aided Verification. Springer, 2016, pp. 42–58.

More

- A. Reid, R. Chen, A. Deligiannis, D. Gilday, D. Hoyes, W. Keen, A. Pathirane, O. Shepherd, P. Vrabel, and A. Zaidi, “End-to-end verification ↩

- A. Reid, “Trustworthy Specifications of Arm v8-A and v8-M system Level Architecture,” in Proceedings of Formal Methods in Computer-Aided Design (FMCAD 2016), October 2016, pp. 161–168. [Online]. Available: https://alastairreid.github.io/papers/fmcad2016-trustworthy.pdf ↩

- A. Reid, “Defining interfaces between hardware and software: Quality and performance,” Ph.D. dissertation, School of Computing Science, University of Glasgow, March 2019. ↩

- A. Reid, “Who guards the guards? Formal validation of the Arm v8-M architecture specification,” Proceedings of the ACM on Programming Languages, vol. 1, no. OOPSLA, p. 88, 2017. ↩

- N. Hardy. “The confused deputy (or why capabilities might have been invented),” ACM SIGOPS Operating Systems Review, vol. 22, no. 4, pp 36-38, 1988. ↩

- B. Davis, R. N. Watson, A. Richardson, P. G. Neumann, S. W. Moore, J. Baldwin, D. Chisnall, J. Clarke, N. W. Filardo, K. Gudka et al., “CheriABI: Enforcing valid pointer provenance and minimizing pointer privilege in the POSIX C run-time environment,” University of Cambridge, Computer Laboratory, Tech. Rep., 2019. ↩

- J. Woodruff, R. N. Watson, D. Chisnall, S. W. Moore, J. Anderson, B. Davis, B. Laurie, P. G. Neumann, R. Norton, and M. Roe, “The CHERI capability model: Revisiting RISC in an age of risk,” in ACM/IEEE 41st International Symposium on Computer Architecture, ISCA 2014, Minneapolis, MN, USA, June 14-18, 2014, 2014, pp. 457–468. ↩

- J. Woodruff, A. Joannou, H. Xia, B. Davis, P. G. Neumann, R. N. M. Watson, S. Moore, A. Fox, R. Norton, and D. Chisnall, “Cheri concentrate: Practical compressed capabilities,” IEEE Transactions on Computers, 2019. ↩

- K. Gudka, R. N. Watson, J. Anderson, D. Chisnall, B. Davis, B. Laurie, I. Marinos, P. G. Neumann, and A. Richardson, “Clean application compartmentalization with SOAAP,” in Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security. ACM, 2015, pp. 1016–1031. ↩

- A. Joannou, J. Woodruff, R. Kovacsics, S. W. Moore, A. Bradbury, H. Xia, R. N. Watson, D. Chisnall, M. Roe, B. Davis et al., “Efficient tagged memory,” in Computer Design (ICCD), 2017 IEEE International Conference on. IEEE, 2017, pp. 641–648. ↩

- R. N. Watson, R. M. Norton, J. Woodruff, S. W. Moore, P. G. Neumann, J. Anderson, D. Chisnall, B. Davis, B. Laurie, M. Roe et al., “Fast protection-domain crossing in the CHERI capability-system architecture,” IEEE Micro, vol. 36, no. 5, pp. 38–49, 2016. ↩

- A. Reid, R. Chen, A. Deligiannis, D. Gilday, D. Hoyes, W. Keen, A. Pathirane, O. Shepherd, P. Vrabel, and A. Zaidi, “End-to-end verification of processors with ISA-Formal,” in International Conference on Computer Aided Verification. Springer, 2016, pp. 42–58. ↩

- “PVS specification and verification system,” http://pvs.csl.sri.com/. ↩

- A. Armstrong, T. Bauereiss, B. Campbell, A. Reid, K. E. Gray, R. M. Norton, P. Mundkur, M. Wassell, J. French, C. Pulte, S. Flur, I. Stark, N. Krishnaswami, and P. Sewell, “ISA semantics for ARMv8-A, RISC-V, and CHERI-MIPS,” in POPL 2019: Proc. 46th ACM SIGPLAN Symposium on Principles of Programming Languages, 2019. ↩

- T. Nipkow, L. C. Paulson, and M. Wenzel, Isabelle/HOL: A Proof Assistant for Higher-Order Logic. Springer, 2012. ↩

If you could revise

the fundmental principles of

computer system design

to improve security...

... what would you change?