2019 Survey

References:

- Neural Architecture Search: A Survey. Thomas Elsken, Jan Hendrik Metzen, Frank Hutter. Journal of Machine Learning Research, 2019.

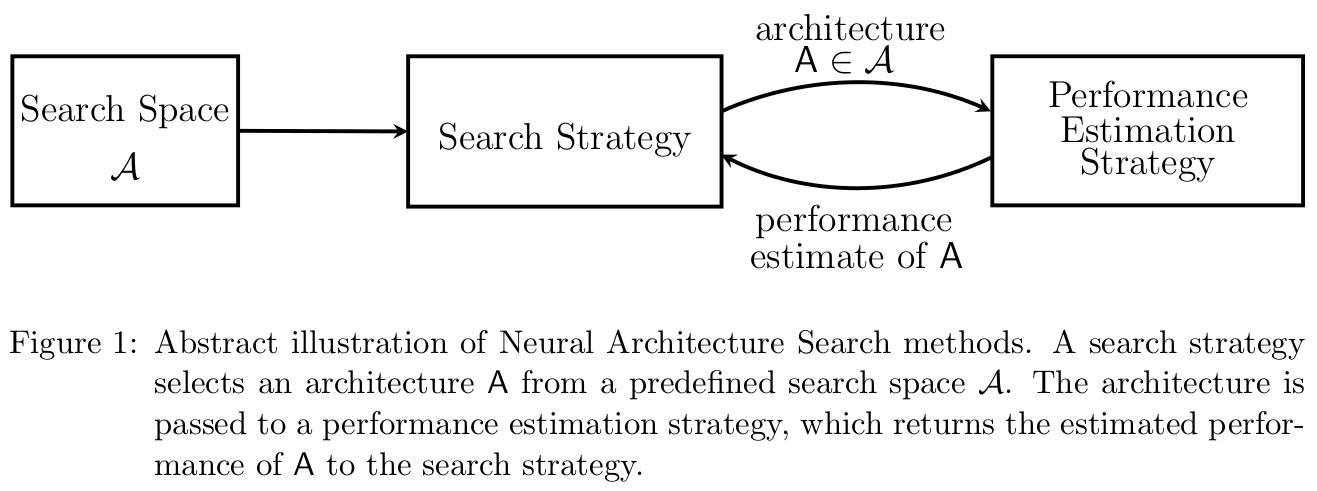

Deep Learning has enabled remarkable progress over the last years on a variety of tasks, such as image recognition, speech recognition, and machine translation. One crucial aspect for this progress are novel neural architectures. Currently employed architectures have mostly been developed manually by human experts, which is a time-consuming and error-prone process. Because of this, there is growing interest in automated neural architecture search methods. We provide an overview of existing work in this field of research and categorize them according to three dimensions: search space, search strategy, and performance estimation strategy.

NAS methods have already outperformed manually designed architectures on some tasks, such as image classification (1; 2), object detection (1), or semantic segmentation (3).

- Search Space $\mathcal{A}$. Defines which architectures can be represented in principle.
  - Incorporating prior knowledge about typical properties of architectures well-suited for a task can reduce the size of the search space and simplify the search.
  - However, this also introduces human bias, which may prevent finding novel architectural building blocks that go beyond current human knowledge.

- Search Strategy. Details how to explore the search space (which is often exponentially large or even unbounded).
  - It encompasses the classical exploration-exploitation trade-off:
    - on the one hand, well-performing architectures should be found quickly;
    - on the other hand, premature convergence to a region of suboptimal architectures should be avoided.

- Performance Estimation Strategy.
  - The simplest option is to train each candidate architecture in the standard way, but this is computationally expensive.
  - Hence the interest in methods that reduce the cost of these performance estimations.
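To make the "exponentially large" remark concrete, here is a minimal sketch that counts the architectures in a toy chain-structured search space. The operation names and the depth of 8 are assumptions chosen for illustration only; they do not come from the survey.

```python
from itertools import product

# Hypothetical per-layer operation choices (illustrative, not from the survey).
ALL_OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]


def chain_search_space(ops, depth):
    """Iterate over every chain-structured architecture with `depth` layers."""
    return product(ops, repeat=depth)


def space_size(ops, depth):
    """Number of architectures: one independent choice of operation per layer."""
    return len(ops) ** depth


if __name__ == "__main__":
    # The full toy space grows exponentially with depth.
    print(space_size(ALL_OPS, 8))
    # Prior knowledge (here: allowing only two operations) shrinks the space,
    # at the price of excluding every architecture that uses the other two.
    print(space_size(["conv3x3", "maxpool"], 8))
```

Restricting the operation set is the kind of prior knowledge the first bullet describes: the search gets easier, but anything outside the restricted set can no longer be found.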

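The search strategy selects an architecture $A \in \mathcal{A}$ from the search space; the performance estimation strategy evaluates it and returns an estimate of its performance to the search strategy.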
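The interplay of the three dimensions can be sketched as a loop. The version below uses plain random search as the search strategy and a made-up scoring table in place of real training; `OPS`, `DEPTH`, `sample_architecture`, `estimate_performance`, and the scores are all hypothetical names and values introduced for this example, not anything defined in the survey.

```python
import random

# Toy search space: chains of DEPTH layers, each picking one of OPS (assumed).
OPS = ["conv3x3", "conv5x5", "maxpool", "identity"]
DEPTH = 4


def sample_architecture(rng):
    """Search strategy step: draw one architecture A from the search space."""
    return tuple(rng.choice(OPS) for _ in range(DEPTH))


def estimate_performance(arch):
    """Stand-in for the performance estimation strategy.

    A real estimator would train and validate the network; this placeholder
    just averages invented per-operation scores so the loop runs instantly.
    """
    toy_scores = {"conv3x3": 0.9, "conv5x5": 0.8, "maxpool": 0.4, "identity": 0.1}
    return sum(toy_scores[op] for op in arch) / len(arch)


def random_search(num_trials, seed=0):
    """Sample architectures at random and keep the best estimate seen so far."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = estimate_performance(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    arch, score = random_search(num_trials=50)
    print(arch, round(score, 3))
```

Random search is pure exploration; strategies that exploit earlier results (evolutionary methods, reinforcement learning, Bayesian optimization) would replace `sample_architecture` with a step that uses the scores seen so far.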

More

1. Learning transferable architectures for scalable image recognition. Barret Zoph, Vijay Vasudevan, Jonathon Shlens, and Quoc V. Le. In Conference on Computer Vision and Pattern Recognition, 2018.

2. Large-scale evolution of image classifiers. Esteban Real, Sherry Moore, Andrew Selle, Saurabh Saxena, Yutaka Leon Suematsu, Quoc V. Le, and Alex Kurakin. ICML, 2017.

3. Searching for efficient multi-scale architectures for dense image prediction. Liang-Chieh Chen, Maxwell Collins, Yukun Zhu, George Papandreou, Barret Zoph, Florian Schroff, Hartwig Adam, and Jon Shlens. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems 31, pages 8713–8724. Curran Associates, Inc., 2018.
